Unpredictable Patterns #122: The return of filing

Structure, search, cost and recorded societies

Dear reader,

This week was spent in Warsaw, where I managed to catch a cold. Looking back at my old notes, I find I am often sick at this time of year, so there is something cyclical happening here. And I know this because I keep a journal, and have - some - of my files in order. Up until now that has seemed terribly old-fashioned, but maybe filing is on its way back!

TL;DR

Filing is back. Search made structure look optional; large language models show that messy data can mean bad answers. Tag meetings, keep daily logs, prune on a schedule: minimal filing could deliver outsized AI gains. The recorded organization, and the recorded society, will raise new policy issues as well.

Filing

One way to think about societies is to look at how they organize their memories. Libraries, archives and recordings make up the material memory, and the processes and tools available to us define how we can access this material memory.

One way to think about material memory is to look at how organized it is - that is, how structured our storage of the memories is. Libraries are a good example: they are highly structured, with indices that allow us to find the right book when we need it. The web, in comparison, brought about a less structured social memory where we relied on search to find what we needed. LLMs now represent an interesting shift: our social memory is now probabilistically structured in large models that can predict the next token.

Probability plays a role in libraries too. One way to think about cataloguing is that it is a game that a librarian plays with potential library users: the librarian is trying to predict the search strategies of the library user, cataloguing a new piece in such a way that the probability of it matching a predicted search is optimized. The structure - Dewey decimal - and the classification of an individual item are both moves available to the librarian in this game.

But the librarian also plays a normative game: they are prescribing a division of information into domains that are socially shared. This is not a bad thing - on the contrary: it is an added value to the library, since the domains thus produced create a common frame of reference.

When it comes to LLMs we can combine them with more structured data, shifting the probabilities in ways that produce higher-value outputs. Retrieval-Augmented Generation is a variation on this theme - as are other techniques. And maybe what this means is that we need to revisit an old idea: the practice of filing.

Filing: a brief history

The modern filing cabinet emerged as an epistemic machine that reorganized how institutions could think. Before the 1890s, correspondence lived in pigeonholes and chronological letter books, a temporal logic that reflected how business unfolded day by day. The vertical filing system, devised by Edwin Seibels in 1898, imposed instead an alphabetical and categorical logic that detached documents from their historical sequence and reconstituted them as searchable facts. This represented a new form of institutional memory that could be interrogated rather than simply recalled.

Filing cabinets arrived precisely when large organizations needed to manage unprecedented volumes of information—insurance companies calculating actuarial risks, corporations tracking geographically dispersed operations, government bureaucracies administering expanding populations. The technology did not simply respond to these needs but actively shaped them, creating new possibilities for what historian Peter Galison would call "control and prediction." A filed document became simultaneously a historical record and a deployable resource, abstracted from its original context and made available for forms of analysis that would have been unthinkable under the old temporal systems.

The digital revolution promised to eliminate filing altogether, yet paradoxically restored its epistemic function in new forms. Google's PageRank algorithm and library science's Dublin Core metadata both represent attempts to solve the same problem that plagued Melvil Dewey: how to organize knowledge so that it can be systematically retrieved and recombined. Today's vector databases and knowledge graphs are filing cabinets reimagined for probabilistic rather than categorical logic - and this is where things start to become interesting.

The economics of filing

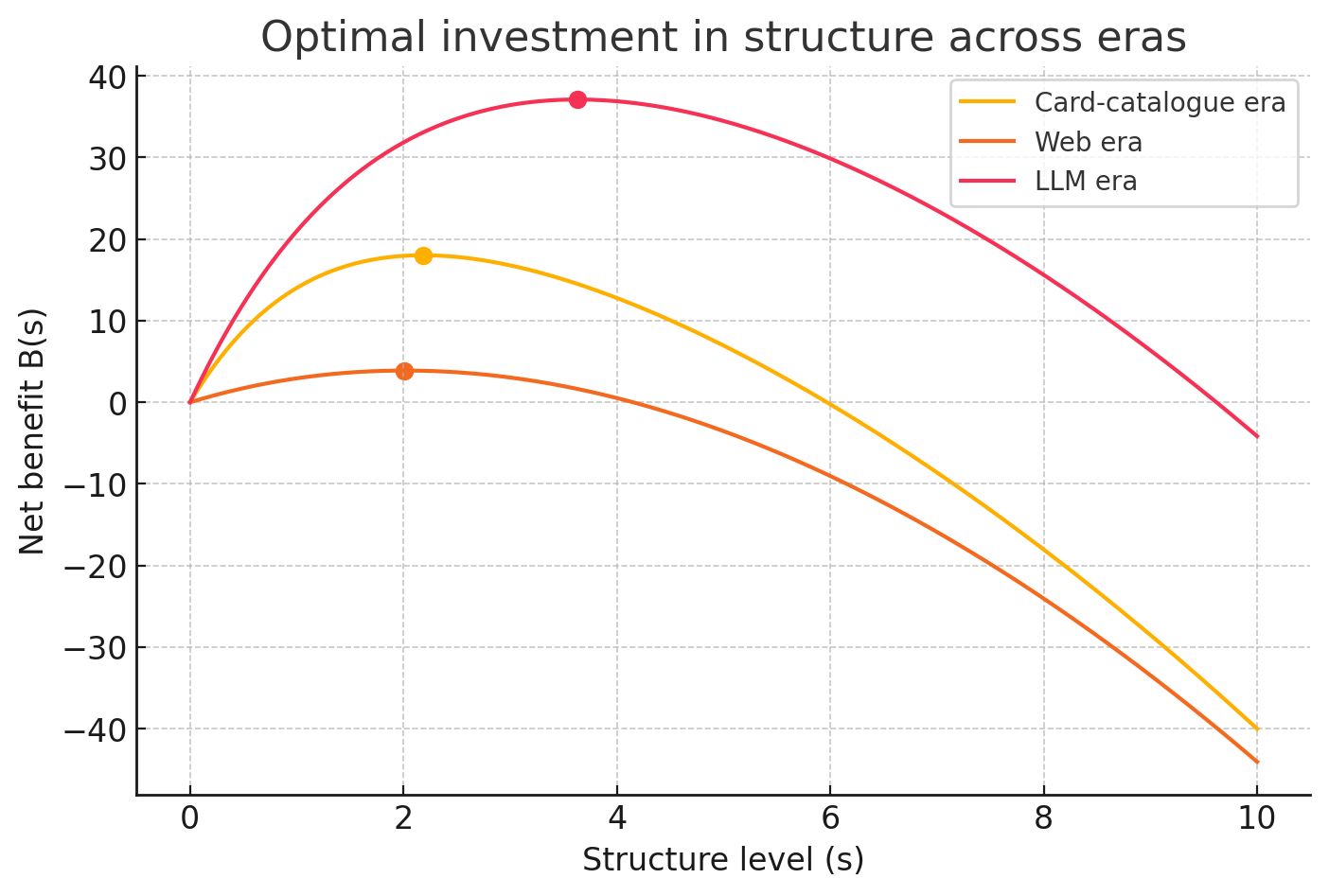

Structure is costly, and producing structured social or organizational memory is costlier than just storing things haphazardly. The cost/benefit equation for structure also varies with the existing technological base. One way to model it would be to explore how it changes across our library / web / LLM technologies.

To do that we can play with a simple model, which would look something like this:

B(s) = R(q,s) + P(a,s) - C(s)

Where:

Picture a scoreboard called B(s) that says whether adding more structure s (tags, categories, ontologies) to your information is worth it. The first positive term, R(q,s), measures how much faster you can find things; its boost shrinks when your search tool q is already strong. The second positive term, P(a,s), measures how much better an AI of power a performs when your data are tidy; it is zero without AI, but climbs rapidly once a large model starts tripping over messy notes. Against these you subtract C(s), the cost of cataloguing, which rises steeply because easy labels are cheap while deep cataloguing is labor-intensive.

Historically, when card catalogues ruled, search was weak, so extra structure paid off. The web’s full-text search made q huge, shrinking the retrieval gain, and many stopped investing in organization. Now large language models add a big predictive payoff, making P so large that it outweighs the rising cost again. In short, structure matters once more - not mainly for humans to look things up, but to keep AI from hallucinating and to wring more reliable answers from the same data. [1]
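To make the comparison concrete, here is a minimal Python sketch of the model, using the functional forms given in the note at the end of this letter. Every number in it (R_MAX, P_MAX, K_P, C1, C2, and the k and a values for each era) is an invented assumption, chosen only to show the shape of the argument and not an estimate of anything.

```python
import math
from dataclasses import dataclass

# All parameter values below are illustrative assumptions, not measurements.
R_MAX = 1.0   # ceiling on the search value R
P_MAX = 2.0   # ceiling on the AI prediction value P
K_P = 3.0     # how quickly AI benefits scale with structure
C1 = 0.2      # linear cost of structure
C2 = 1.0      # accelerating (quadratic) cost of structure


@dataclass(frozen=True)
class Era:
    name: str
    k: float  # k(q): how much structure still matters for finding things
    a: float  # AI switch: 0 before AI, 1 in the AI era


ERAS = {
    "library": Era("library", k=4.0, a=0.0),  # weak search, structure pays
    "web": Era("web", k=0.5, a=0.0),          # strong search, structure pays little
    "llm": Era("llm", k=0.5, a=1.0),          # strong search, AI term switched on
}


def benefit(s: float, era: Era) -> float:
    """B(s) = R(q,s) + P(a,s) - C(s) for a structure level s in [0, 1]."""
    search_value = R_MAX * (1 - math.exp(-era.k * s))
    ai_value = era.a * P_MAX * (1 - math.exp(-K_P * s))
    cost = C1 * s + C2 * s**2
    return search_value + ai_value - cost


def best_structure(era: Era, steps: int = 1000) -> float:
    """Grid-search the structure level in [0, 1] that maximizes B(s)."""
    grid = [i / steps for i in range(steps + 1)]
    return max(grid, key=lambda s: benefit(s, era))


if __name__ == "__main__":
    for era in ERAS.values():
        print(f"{era.name:8s} best s = {best_structure(era):.2f}")
```

With these invented numbers the web era ends up with the lowest optimal level of structure and the LLM era with the highest, which is the pattern described above. Other parameter choices would move the curves around, which is exactly where the debate should be.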

This gives us different benefit curves for different technologies. We can debate the values, the differences and so on - but let’s allow, for the sake of argument, that there is a difference here. What, then, does this mean?

Re-learning filing

One thing it means is that organizations have to re-learn structure, and re-invent filing. Not return to the old ways of filing and structuring social and organizational memory - but re-invent them.

The digital skills most firms shed over the last 20 years—taxonomies, controlled vocabularies, disciplined metadata—have suddenly become strategic again. So the first task is simply to remember what our grandparents knew: that filing is infrastructure.

Relearning structure is about blending classic librarianship with today’s graph and vector tooling. A minimum viable reboot can be as lightweight as enforcing canonical IDs for people, products, and contracts, then wiring those into a retrieval-augmented pipeline. Next come shared ontologies for domain concepts and provenance stamps so every fact can be traced. All of this can be crowd-tagged internally—think “corporate Wikipedia”—then hardened where the stakes are highest (compliance, pricing, safety). The payoff scales non-linearly: once even 10–20 percent of critical data are well-filed, the model’s hit rate rises enough to make further structure self-funding.
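As a rough illustration of that minimum viable reboot, the Python sketch below files facts under canonical IDs, stamps each with its provenance, and assembles what it finds into context for a model prompt. The alias table, the ID scheme ("org:...", "person:...") and all the records are hypothetical; a real pipeline would sit on a proper entity registry and a retrieval index.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical alias table: every spelling of a name maps to one canonical ID.
ALIASES = {
    "acme": "org:acme",
    "acme corp": "org:acme",
    "acme corporation": "org:acme",
    "j. smith": "person:jane-smith",
    "jane smith": "person:jane-smith",
}


@dataclass(frozen=True)
class Fact:
    entity_id: str     # canonical ID the fact is filed under
    text: str          # the fact itself
    source: str        # provenance stamp: where it came from
    recorded_on: date  # provenance stamp: when it was recorded


def canonical_id(name: str) -> Optional[str]:
    """Resolve a free-text name to its canonical ID, if it is known."""
    return ALIASES.get(name.strip().lower())


def retrieve(name: str, facts: list[Fact]) -> list[Fact]:
    """Return the facts filed under the named entity, newest first."""
    entity = canonical_id(name)
    if entity is None:
        return []
    hits = [f for f in facts if f.entity_id == entity]
    return sorted(hits, key=lambda f: f.recorded_on, reverse=True)


def build_context(name: str, facts: list[Fact]) -> str:
    """Format retrieved facts, with provenance, as context for a model prompt."""
    return "\n".join(
        f"- {f.text} [source: {f.source}, {f.recorded_on.isoformat()}]"
        for f in retrieve(name, facts)
    )


# Invented example records.
FACTS = [
    Fact("org:acme", "Contract renewed for two years.", "contracts/2024-017", date(2024, 3, 1)),
    Fact("org:acme", "Pricing tier changed to B.", "pricing-log", date(2024, 6, 12)),
    Fact("person:jane-smith", "Owns the Acme account.", "crm", date(2023, 11, 5)),
]

if __name__ == "__main__":
    print(build_context("ACME Corp", FACTS))
```

The point of the design is that "ACME Corp", "Acme" and "Acme Corporation" all land in the same file, and that every line handed to the model carries a trace of where it came from.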

Because the gains compound, the first organization to cross that modest threshold wins twice: it gets more reliable outputs immediately and it learns, before its rivals, which filing practices matter most. That feedback loop locks in advantage; laggards will struggle to catch up because their own tools keep misleading them, obscuring the very errors they need to fix. In short, the new competitive frontier isn’t bigger models, it’s better memory: who can impose just enough order, soonest, to let the AI run safely and fast.

This requires a culture shift: logging becomes as non-negotiable as sending calendar invites, and managers are judged on the clarity of their logs as much as on KPIs. IT must embed templates into recording tools, HR must reward compliance, and leadership must model the habit by maintaining their own visible logs. Here too the payoff compounds: once that 10–20 percent of critical knowledge is captured, AI systems start delivering more reliably, reinforcing the habit and freeing time to deepen the structure where it matters most.
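What a template embedded in a recording tool might look like can be sketched in a few lines of Python. The field names and the rule that all four fields are mandatory are assumptions made for illustration, not a recommendation of a particular schema.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical house rule: the fields every meeting log must fill in.
REQUIRED_FIELDS = ("title", "attendees", "decisions", "tags")


@dataclass
class MeetingLog:
    day: date
    title: str = ""
    attendees: list[str] = field(default_factory=list)
    decisions: list[str] = field(default_factory=list)
    tags: list[str] = field(default_factory=list)


def missing_fields(log: MeetingLog) -> list[str]:
    """List the required fields that were left empty."""
    return [name for name in REQUIRED_FIELDS if not getattr(log, name)]


def normalize_tags(log: MeetingLog) -> MeetingLog:
    """Trim, lower-case, de-duplicate and sort tags so they stay searchable."""
    log.tags = sorted({t.strip().lower() for t in log.tags if t.strip()})
    return log


if __name__ == "__main__":
    log = MeetingLog(
        day=date(2025, 1, 15),
        title="Pricing review",
        attendees=["Ana", "Ben"],
        tags=["Pricing ", "pricing", "Q1"],
    )
    print(missing_fields(log))       # the decisions field was left empty
    print(normalize_tags(log).tags)  # duplicates and stray spaces removed
```

A check like missing_fields can run when the meeting is closed in the tool, so the nudge to file arrives while the decisions are still fresh.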

Developing new filing habits may be a key competitive advantage, perhaps much to everyone’s surprise.

The recorded organization - and the recorded society

If we follow this trend we can see a new kind of organization, and even society, emerge: one in which events, discussions and decisions are recorded and structured in different ways. The emergence of this new kind of shared memory is, of course, not without challenges. The obvious one is privacy. In a recorded society, privacy is more or less eliminated - especially if access is open and available to all. If it is not, then we have built surveillance tools that a malicious government could abuse. So just tweaking access seems difficult. Seeking aggregations and different kinds of structure that allow for privacy could work, but is really hard - especially if someone has access to more than one data set and can combine them to re-identify individuals. At some point, then, we might have to institute social forgetting, much as Viktor Mayer-Schönberger argued in the late 2000s: not so much as an individual right to be forgotten, but as a social institution. If we want to be fanciful we could imagine agencies tasked with social forgetting, or standards for social memory degradation over time.
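A standard for memory degradation could, in its simplest form, look something like the Python sketch below: recordings older than a retention period are reduced to an aggregate and the raw material is dropped. The 90-day period, the Recording fields and the choice of per-topic counts as the aggregate are all assumptions for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta

RETENTION = timedelta(days=90)  # hypothetical retention period


@dataclass(frozen=True)
class Recording:
    recorded_on: date
    topic: str
    transcript: str  # the raw, privacy-sensitive part


def degrade(recordings: list[Recording], today: date) -> tuple[list[Recording], Counter]:
    """Keep recent recordings; reduce expired ones to per-topic counts."""
    kept = []
    summary = Counter()
    for rec in recordings:
        if today - rec.recorded_on > RETENTION:
            summary[rec.topic] += 1  # only the aggregate survives
        else:
            kept.append(rec)         # still inside the retention window
    return kept, summary


if __name__ == "__main__":
    recs = [
        Recording(date(2025, 1, 1), "pricing", "raw transcript"),
        Recording(date(2025, 1, 2), "pricing", "raw transcript"),
        Recording(date(2025, 5, 1), "hiring", "raw transcript"),
    ]
    kept, summary = degrade(recs, today=date(2025, 5, 10))
    print([r.topic for r in kept], dict(summary))
```

Counting topics is only the crudest kind of aggregate, and as noted above aggregates can still leak when combined with other data sets, so this shows the mechanism rather than a privacy guarantee.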

Recorded organizations will certainly be asked for their retention policies, and forgetting practices. Technologies and methods for aggregating insights and deleting the underlying recordings will evolve faster, and hopefully we will be able to find a sound balance between memory and privacy - although we should assume that authoritarian states will be less fussed. There, being a part of the nomenklatura will mean being forgotten, lost to memory at will — whereas the oppressed will be remembered with a vengeance.

Filing is a dangerous tool in the hands of a dictator.

Policy proposals for the new age of filing

Let’s have a look at - tongue in cheek - what some interesting policy proposals could look like in this space. The following are some sketches and ideas for policies that could become relevant as filing makes a comeback.

1. The National Digital Infrastructure Act (NDIA)

To address the emerging "structuring gap" and secure our long-term economic competitiveness, we propose the creation of the National Digital Infrastructure Authority. This public-private body will be tasked with accelerating the adoption of structured data practices across key industries. Rather than letting market forces create a small number of dominant firms and leaving SMEs behind, the NDIA will fund the development of open-source, sector-specific ontologies and controlled vocabularies (e.g., for manufacturing, life sciences, and green technology). It will provide grants and training to help companies re-skill their workforce in data governance and taxonomy management, treating these skills as critical national infrastructure. The goal is not to pick winners, but to raise the entire ecosystem's capacity to build the reliable, structured memory required to effectively deploy AI, ensuring a broad-based competitive advantage.

2. The Information Integrity and Obsolescence Act

Recognizing that total societal recording is incompatible with core democratic freedoms, we propose the establishment of an Information Integrity and Obsolescence Authority. This agency will move beyond the reactive "right to be forgotten" by designing and mandating a national framework for proactive, institutional forgetting. It will establish technical standards for data degradation, requiring that certain classes of data (e.g., recordings of routine meetings, location data, non-critical public discourse) be automatically aggregated and their raw forms deleted after a statutorily defined period. The Authority will function as an independent arbiter, balancing the public's interest in historical records against the societal need for privacy and the capacity for renewal, thereby preventing the state's memory from becoming a tool of permanent surveillance and social control.

3. The Digital Work Environment Act of 2026

To protect workers' rights within the "recorded organization," we propose an amendment to existing labor law, the Digital Work Environment Act. This legislation will establish a worker's "right to data portability and opacity," granting employees ownership of their personal contribution logs and the right to take this structured record with them between jobs. Crucially, it will mandate algorithmic transparency in any performance review that relies on the analysis of these logs, giving workers the right to inspect the criteria used and contest biased or flawed automated evaluations. It will also define a clear boundary between legitimate organizational knowledge capture and invasive employee surveillance, ensuring that the drive for structured memory does not eliminate the private spaces required for professional autonomy and development.

4. The Public Memory Modernization Mandate

We propose a modernization of the mandate for our national libraries and archives, re-envisioning them as providers of foundational "public good" data for the AI era. These institutions will receive dedicated funding to create, maintain, and serve high-quality, structured datasets and APIs for essential public information (e.g., canonical lists of laws, regulations, public officials, and government-funded research). This "Public Memory API" will serve as a free, reliable baseline of ground truth for both public and private AI developers, reducing AI hallucinations and the societal cost of misinformation. By investing in our public memory institutions as core digital infrastructure, we provide the reliable foundation upon which a trustworthy and innovative national AI ecosystem can be built.

5. The Coalition for Democratic Memory and Export Control Reform

In light of the clear dual-use nature of societal recording technologies, we propose a two-pronged foreign policy initiative. First, we will update our export control regulations to classify advanced tools for mass data structuring and analysis as "societal memory architectures" subject to review, preventing their sale to authoritarian regimes where they would be used as instruments of oppression. Second, we will launch a diplomatic initiative to form a "Coalition for Democratic Memory" with allied nations. This coalition will collaboratively develop and promote an open-source "Democratic Memory Stack"—a suite of technologies for organizational and societal record-keeping with privacy, ephemerality, and individual rights engineered into its core—as a global standard and a direct ideological competitor to the surveillance-first models being exported by authoritarian states.

None of these proposals are likely to manifest exactly like this anytime soon - but they outline questions we will need to look more closely at nevertheless.

Thanks for reading,

Nicklas

[1] Think of s as how organized your information is - like the difference between having all your notes scattered randomly versus having them in perfectly labeled folders with an index.

B(s) is the total benefit you get from that level of organization. It's made up of three parts:

R(q,s) is the "search value" - how much organizing helps you find stuff. The formula says this benefit grows quickly at first when you add some organization (going from total chaos to basic folders), but then levels off. It's like diminishing returns - the first bit of organization helps a lot, but making it even more organized doesn't help as much.

P(a,s) is the "AI prediction value" - how much your organized data helps AI systems work better. The a parameter is like a switch that gets turned on in the AI era. When a=0 (pre-AI times), this whole term disappears. When a=1 (AI era), suddenly organized data becomes super valuable for training models and making predictions.

C(s) is the cost of organizing everything. This grows faster and faster as you make things more organized - it's expensive to maintain perfect organization.

R(q,s) = R_max(1 - e^(-k(q)s))

This is an exponential saturation curve for search value.

R_max = the maximum possible benefit you can get from search

k(q) = how "search-sensitive" your system is (q represents search quality)

The e^(-k(q)s) part starts at 1 when s=0, then shrinks toward 0 as s increases

So (1 - e^(-k(q)s)) starts at 0 and grows toward 1

Think of it like learning to ride a bike - the first few attempts give you huge improvements, but once you're decent, extra practice gives smaller and smaller gains. Same with organization: going from chaos to basic folders is huge, but going from good organization to perfect organization barely helps your search.

The k(q) parameter captures different eras:

Library era: k is high (structure really matters for finding things)

Google era: k gets lower (good search works even with messy data)

P(a,s) = a P_max(1 - e^(-k_p s))

This is the AI prediction value, with the same saturation shape.

a is the "AI switch" - it's 0 in pre-AI eras, then jumps to 1 when AI arrives

P_max = maximum benefit AI can get from structured data

k_p = how quickly AI benefits scale with structure

The cool thing is this term is completely dormant (a=0) until the AI era, then suddenly explodes into importance. It's like having a hidden superpower that only activates under certain conditions.

C(s) = c₁s + c₂s²

This is quadratic cost growth - costs that accelerate.

c₁s = linear costs (basic overhead of any organization)

c₂s² = the accelerating costs (perfectionism gets expensive fast)

Think about organizing your room: basic tidying is easy, but achieving magazine-perfect organization requires disproportionately more effort. The s² term captures how each further step towards perfect organization costs more than the one before it.
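Putting the three formulas together gives a simple condition for the best level of structure. This is a sketch under the same assumptions as the rest of this note, treating s as a continuous quantity and writing s* for the optimum: it sits where the marginal benefit of more structure equals its marginal cost.

```latex
\begin{align*}
B(s) &= R_{\max}\bigl(1 - e^{-k(q)s}\bigr) + a\,P_{\max}\bigl(1 - e^{-k_p s}\bigr) - c_1 s - c_2 s^2 \\
B'(s^*) = 0 \;&\Longrightarrow\;
k(q)\,R_{\max}\,e^{-k(q)s^*} + a\,k_p\,P_{\max}\,e^{-k_p s^*} = c_1 + 2c_2 s^*
\end{align*}
```

The left-hand side falls as s grows and the right-hand side rises, so the two can cross at most once; if the left-hand side is already below c₁ at s = 0, the best choice is no structure at all. Switching a from 0 to 1 adds a positive term on the left, which pushes the crossing to a higher s*: all else equal, the AI era justifies more structure than the web era did.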
