Unpredictable Patterns #112: The tokenized economy

On semiotics, tokenization and the future of work, tasks and jobs

Dear reader,

Last week’s note generated a lot of generous commentary - the subject of time remains underanalyzed in many ways. I will also admit that my simplified view of the increasing processor capacity (it is all shrinking) leaves out a whole lot of story (although I remain ok with that, for now). This week we are doing something else, but related. We are exploring how the process of tokenization can give us an interesting mental model of AGI’s impact on the economy. This is a nerdy one, but one that I think is interesting nevertheless.

The History and Philosophy of Tokenization

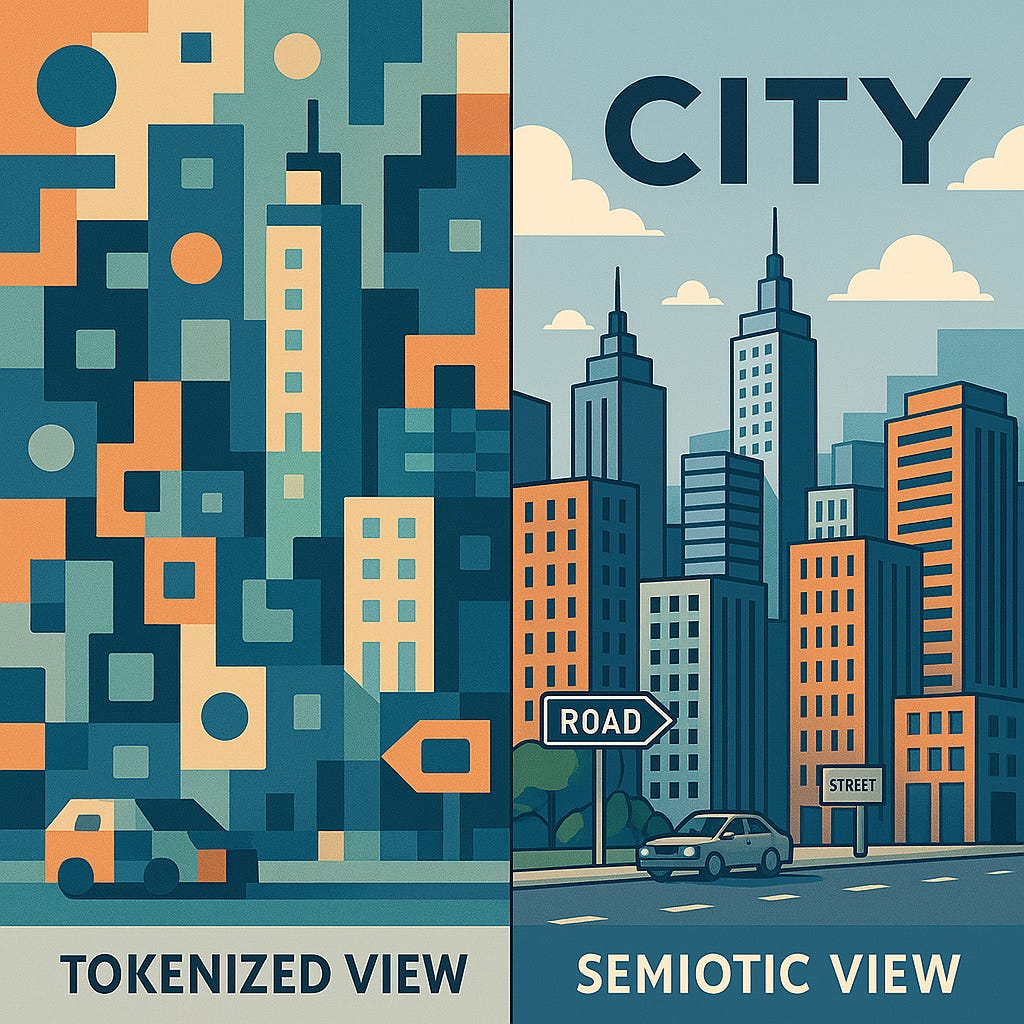

When we consider how artificial general intelligence might reshape our economy, we often reach for familiar metaphors: intelligence explosions, automation waves, or computational resource allocation. Yet these frameworks may obscure what is truly novel about AI's relationship to economic systems. I want to explore the idea here that tokenization—the process of breaking continuous information into discrete, manipulable units—offers a more illuminating lens for understanding this transformation.

Tokenization as a concept predates modern computing. Human civilizations have always engaged in forms of discretization—breaking continuous reality into manageable chunks. Language itself represents perhaps the first systematic tokenization of experience, with words serving as manipulable tokens that stand in for objects, actions, and concepts. Currency functions similarly, tokenizing value exchange. These systems share a common pattern: they transform the continuous and ineffable into the discrete and transmissible.

What makes AI tokenization distinctive is its mechanical nature and unprecedented scale. When large language models process text, they don't engage with meaning as humans do—they first convert language into numerical tokens, applying statistical patterns to predict what follows. This process feels fundamentally different from human language comprehension, which remains embedded in embodied experience and social context. The AI doesn't "know" what the tokens represent in the lived world; it manipulates them according to patterns observed across vast training data. Now, it may still be that at some level this is what happens in our minds as well — but that remains to be proven.

In practice, tokenization in large language models works by breaking down text into subword units using algorithms like Byte-Pair Encoding (BPE) or WordPiece.1 These algorithms build a vocabulary by iteratively merging character sequences that co-occur in the training corpus (BPE merges the most frequent adjacent pair at each step, while WordPiece scores candidate merges with a likelihood-based criterion), producing tokens that may represent characters, partial words, complete words, or, in tokenizers that allow merges across spaces, even common phrases. For instance, the word "tokenization" might be broken into the tokens "token" and "ization," while a common phrase like "New York" might be represented as a single token. This process is language-agnostic and driven purely by corpus statistics. As Peng et al. demonstrate, this statistical approach to chunking text differs markedly from human linguistic intuitions about word boundaries and semantic units.2 The model then converts these tokens into numerical vectors, which become the actual units of computation, creating what Vaswani et al. (2017) describe as an embedding space where similar tokens cluster together: a kind of computational semantics divorced from grounded meaning.3
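To make the merge procedure described above concrete, here is a minimal sketch of BPE-style vocabulary learning. It is a toy under stated assumptions, not how any production tokenizer is implemented: the function names (`learn_bpe`, `merge_pair`, `encode`) are hypothetical, the corpus is a plain list of words, and ties between equally frequent pairs are broken by whichever pair was counted first.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    out = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(words, num_merges):
    """Learn up to `num_merges` merge rules from a list of words (toy BPE)."""
    # Each distinct word starts as a tuple of single characters, with its frequency.
    corpus = Counter(tuple(w) for w in words)
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by how often each word occurs.
        pairs = Counter()
        for symbols, freq in corpus.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)  # most frequent pair; first seen wins ties
        merges.append(best)
        # Rewrite the corpus with the chosen pair merged.
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            new_corpus[merge_pair(symbols, best)] += freq
        corpus = new_corpus
    return merges


def encode(word, merges):
    """Tokenize a word by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


# Illustrative corpus (an assumption, chosen only to show the mechanics).
toy_corpus = ["token"] * 5 + ["tokens"] * 3 + ["ization"] * 4 + ["tokenization"] * 2
rules = learn_bpe(toy_corpus, num_merges=8)
print(encode("tokenization", rules))
```

Nothing in the procedure consults a dictionary or a grammar. Which boundaries emerge depends only on pair frequencies in the corpus and on how many merges are allowed.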

This distinction could matter when we consider economic impact. Our economy—until now—has been designed around human cognitive capabilities. Markets, organizations, and institutions evolved to complement our particular modes of information processing, social coordination, and value exchange. As tokenized machine intelligence enters this ecosystem, it doesn't simply augment existing processes but reorganizes them according to its own operational logic.

Consider how the tokenizing lens differs from automation narratives. Traditional automation replaces specific human physical or cognitive labor with machines that perform equivalent functions. Tokenization, by contrast, restructures the underlying information architecture of entire domains. It doesn't just replace the worker; it reconceptualizes the work itself as sequences of discrete, predictable patterns—patterns that may bear little resemblance to how humans categorize tasks.

The history of computing offers instructive parallels. Early programmable computers didn't merely automate calculation; they transformed our conception of what calculation could be. Similarly, databases didn't just organize information more efficiently; they changed what counted as information and how it could be structured and queried. Each advance in computing has reinterpreted reality through the lens of what can be discretized and algorithmically manipulated.

The tokenization metaphor also illuminates the economic reorganization already underway. Consider how digital platforms have restructured industries by tokenizing previously continuous experiences. Uber tokenized transportation into discrete, algorithmic units; Airbnb did the same for accommodation; TikTok for entertainment. Each platform succeeded by reconceptualizing an industry in terms that could be discretized, quantified, and optimized according to computational logic.

I think this comes close to what Andreessen meant when he noted that software will “eat” everything - but in order to do that it first needs to digest the world into tokens.4 Now, this is not a new phenomenon in the economy - it is a version of what is sometimes called modularization, but driven by different mechanisms.5

AGI promises to accelerate this transformation by applying tokenization to domains previously resistant to discretization—including creative work, strategic decision-making, and interpersonal services. This isn't simply automation; it's a fundamental reorganization of economic activities around what can be effectively tokenized and what cannot.

The tokenization lens also helps us understand why AI capabilities can seem simultaneously impressive and alien. Large language models can generate text that appears remarkably human-like, yet their process bears little resemblance to human writing. They aren't thinking beings with intentions communicating ideas; they're statistical systems predicting which tokens typically follow others in similar contexts. The gap between process and output helps explain why AI-generated content can feel uncanny—convincing at a surface level yet somehow missing the deeply human qualities we unconsciously expect.

This gap underlies much of the tension between comprehension and competence that Dennett alerted us to.6

Tokenization, at its core, represents a transformation process: taking continuous or unstructured data and converting it into discrete units suitable for computational manipulation. This process isn't unique to language models—it's fundamental to how machines process any form of information. To understand tokenization's economic implications, we must first formalize its underlying logic.

Any dataset can be tokenized according to multiple possible schemas, each creating different divisions of the original data. Formally, we can define a tokenization T as a mapping function from raw data D to a set of discrete tokens {t₁, t₂, ..., tₙ} according to some schema S:

T(D, S) → {t₁, t₂, ..., tₙ}

The schema S determines how boundaries between tokens are identified and what constitutes a meaningful unit. Crucially, there is no single "correct" tokenization of a dataset. Rather, tokenization schemas are evaluated pragmatically based on their effectiveness for downstream tasks—primarily prediction accuracy and computational efficiency.
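As a rough illustration of the mapping T(D, S), the sketch below applies three different schemas to the same piece of data. Everything here is an assumption made for illustration: the schema functions, the `describe` helper, and the use of sequence length and vocabulary size as crude stand-ins for the "computational efficiency" side of the evaluation.

```python
def tokenize(data, schema):
    """T(D, S): apply a schema S (here simply a function) to raw data D."""
    return schema(data)


def by_whitespace(text):
    """Schema 1: token boundaries fall at whitespace."""
    return text.split()


def by_character(text):
    """Schema 2: every character, including spaces, is its own token."""
    return list(text)


def by_fixed_width(width):
    """Schema 3: cut the text into chunks of `width` characters, ignoring word boundaries."""
    def schema(text):
        return [text[i:i + width] for i in range(0, len(text), width)]
    return schema


def describe(tokens):
    """Two crude proxies: how long the sequence is and how many distinct tokens it needs."""
    return {"sequence_length": len(tokens), "vocabulary_size": len(set(tokens))}


data = "the cat sat on the mat"
for name, schema in [
    ("whitespace", by_whitespace),
    ("character", by_character),
    ("fixed_width_3", by_fixed_width(3)),
]:
    print(name, describe(tokenize(data, schema)))
```

The three schemas trade sequence length against vocabulary size in different ways, and none of them is the "right" one in the abstract. Which is preferable depends on what the downstream model has to predict.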

Seminal research by Mielke et al. (2021) demonstrates this empirically, showing how different tokenization strategies for the same language corpus lead to different model performance characteristics.7 Their work reveals that optimal tokenization may vary depending on the language structure and the specific task. Similarly, Bostrom and Durrett (2020) suggest that tokenization choices significantly impact a model's ability to handle rare words and morphologically complex languages, with consequences for overall predictive performance.8

For multilingual models, the challenge becomes even more apparent. Research on multilingual models shows that systems trained on multiple languages must develop tokenization strategies that work across linguistic boundaries with different morphological structures. Languages with rich morphology like Finnish or Turkish present different tokenization challenges than isolating languages like Chinese or analytic languages like English. The compromise solutions often involve tokenization patterns that wouldn't be intuitive to native speakers of any particular language.9

Multimodal data introduces yet another dimension of complexity. When tokenizing images alongside text, researchers must develop strategies for creating compatible token spaces across fundamentally different data types. Models such as OpenAI's CLIP and Flamingo (Alayrac et al., 2022) represent images as grid patches or learned visual features and text as subword units, then align these disparate token spaces through joint training. The resulting tokens bear little resemblance to human perceptual units in either domain.10

What unites these various approaches is their fundamentally statistical nature. Tokens aren't chosen based on semantic meaning or natural boundaries in the data, but on what divisions optimize for predictive power within computational constraints.

Tokenization vs. Semiotics

This statistical approach to discretizing information stands in contrast to human semiotic systems. Semiotics—the study of signs and symbols and their use or interpretation—provides a useful counterpoint for understanding what makes machine tokenization distinctive.

In the semiotic tradition dating back to Ferdinand de Saussure and Charles Sanders Peirce, a sign pairs a signifier (the form the sign takes) with a signified (the concept it represents). This two-part formulation is Saussure's; Peirce's model adds a third element, the interpretant, which is the sense an interpreter makes of the sign. In both accounts, the relationship between these elements is established through cultural convention and cognitive association. Signs are embedded in complex networks of meaning where interpretation depends on context, cultural knowledge, and embodied experience.

Crucially, semiotic systems evolve through social processes and embodied experience. As Eco (1976) argues, signs gain meaning through their use in communities of interpreters who share contexts and experiences. Signs are grounded in human perception, cognition, and social interaction—they reference an experienced world.11

So, does that mean that human cognition fundamentally is not tokenized? The evidence suggests both yes and no. Cognitive science reveals that our thinking involves both discrete symbolic manipulation and continuous, embodied processes. We chunk experiences into conceptual tokens, yet these tokens remain grounded in embodied sensorimotor systems and emotional contexts. Our cognitive tokens aren't purely symbolic—they carry affective weight and sensory associations that resist complete formalization.

Tokenization, by contrast, creates units based purely on statistical co-occurrence patterns. The "meaning" of a token to an AI system isn't referential but distributional—defined entirely by the token's relationships to other tokens in high-dimensional vector space. As Bender and Koller (2020) forcefully argue, these systems don't have access to the grounding that gives human signs their reference and meaning.
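The idea of distributional meaning can be sketched with a few lines of code. This is a deliberately tiny illustration, assuming a made-up three-sentence corpus and raw co-occurrence counts in place of the learned embeddings real models use; the function names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def cooccurrence_vectors(sentences, window=2):
    """Represent each token by counts of the tokens appearing within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, tok in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[tok][tokens[j]] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


# Toy corpus (an assumption for illustration only).
corpus = [
    "the doctor treats the patient",
    "the nurse treats the patient",
    "the driver plans the route",
]
vectors = cooccurrence_vectors(corpus)
print("doctor vs nurse:", cosine(vectors["doctor"], vectors["nurse"]))
print("doctor vs route:", cosine(vectors["doctor"], vectors["route"]))
```

In this toy setting "doctor" and "nurse" come out as more similar than "doctor" and "route" purely because they keep the same company in the corpus. Nothing in the computation refers to hospitals, patients, or anything else in the experienced world, which is the contrast between distributional and referential meaning.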

This fundamental difference creates two distinct ways of chunking the world:

Semiotic chunking divides reality according to human perceptual systems, embodied experience, and social conventions. Words in human language largely correspond to perceptually or functionally distinct entities and actions in our experienced world. The boundaries between signs typically align with meaningful distinctions in human experience.

Computational tokenization divides data according to statistical patterns and computational efficiency. Token boundaries need not correspond to meaningful distinctions in human experience. A single meaningful unit in human terms might be split across multiple tokens, while frequently co-occurring elements might be merged into a single token regardless of their semantic relationship.

These different chunking systems not only produce different operational logics but also evolve through fundamentally different mechanisms. Tokenization systems evolve through optimization processes aimed at maximizing predictive capability—the tokens that most efficiently enable the system to predict the next token or classify inputs correctly survive and proliferate. This evolution happens rapidly through computational optimization, guided by explicit mathematical objectives and constrained primarily by computational resources.

Semiotic systems, by contrast, evolve through complex social and cognitive processes that resemble Darwinian evolution more than computational optimization. As Deacon (1997) argues in "The Symbolic Species," linguistic signs undergo selection pressures that extend far beyond mere predictive utility.12 They evolve to serve multiple competing functions: facilitating social cohesion within groups, marking boundaries between social groups, enabling precise reference to shared experiences, supporting creative metaphorical extension, and maintaining backward compatibility with existing sign systems. The survival of signs depends on their fitness across this multidimensional landscape of social, cognitive, and practical functions.

Bourdieu's concept of "linguistic capital" further deepens this distinction.13 Signs carry social value and serve as markers of identity and status within communities. Their evolution responds to social power dynamics that have no direct analog in computational tokenization. Similarly, as Tomasello (2010) demonstrates, human signs evolve through collaborative processes of joint attention and shared intentionality—cognitive capacities unique to human social interactions that shape how our semiotic systems develop.

These different evolutionary mechanisms produce different operational logics. Human semiotic systems excel at grounded reference, metaphorical extension, and contextual interpretation. They're optimized for communication between embodied, socially-embedded agents with shared experiences. Tokenization systems excel at pattern recognition and statistical prediction across massive datasets. They're optimized for computational efficiency and generalization from observed patterns.

In human semiotic systems, meaning emerges through recursive loops between experience, language, and thought. Signs point to experiences which themselves are partly structured by our symbolic systems. Tokenization, lacking this grounding loop, creates a different kind of system—one that can capture statistical shadows of meaning without accessing the experiential foundation that makes human signs meaningful.

For economic analysis, this distinction matters profoundly. When we evaluate which economic activities might be transformed by AI, we typically conceptualize those activities in terms of human-meaningful chunks—tasks, skills, and jobs that make sense within our semiotic understanding of work. But AI systems will operate according to their own tokenization logic, potentially reorganizing economic activities along entirely different boundaries.

This mismatch between semiotic and tokenized representations also helps explain why AI systems can simultaneously appear remarkably capable yet strangely brittle. They operate fluently within the statistical patterns of their training data but lack the grounding that helps human signs maintain consistent reference across contexts.

The economic consequences of this distinction are only beginning to emerge. As AI systems reshape industries, they won't simply replace human labor within existing categories; they'll reconfigure activities according to their own operational logic and ontologies. Understanding this reconfiguration requires attention to how tokenization differs from human semiotic categories, and how these different chunking systems might interact in emerging socio-technical systems.

The Semiotic Immune System

The conventional analysis of how artificial intelligence will impact the economy assumes that AI systems will interact with economic activities as they are currently organized—replacing or augmenting human labor within existing task categories, job descriptions, and industry sectors. But the tokenization perspective suggests something more profound: AI systems may fundamentally reorganize economic activities according to a different operational logic—one that doesn't respect our semiotic categories.

However, this reorganization will face what we might call a "semiotic immune system"—the entrenched categorization schemes that structure current economic activities. This immune system manifests in multiple forms: legal and regulatory frameworks that define occupations and industries; educational systems that credential workers according to established skill taxonomies; organizational structures that divide labor into standardized roles; and social norms that attribute meaning and value to particular forms of work.

This semiotic immune system creates significant friction against rapid tokenization of economic activities. Consider healthcare as an example. The practice of medicine is organized around deeply entrenched semiotic categories—specialties defined by body systems, diagnostic codes grouped by disease etiology, treatment protocols segmented by intervention type. These categories are embedded in everything from medical education to insurance reimbursement structures to hospital design. They form a complex web of interdependent semiotic chunks that resist reorganization.

This friction suggests that the shift toward tokenized economic activity may indeed progress more slowly than simple task replacement. As Brynjolfsson, Rock, and Syverson (2019) argue in their work on the "productivity J-curve," major technological transformations often involve substantial adjustment costs and complementary investments before their full impact materializes. The reorganization of economic activity around AI-native tokens represents precisely such a transformation—requiring not just new technical capabilities but new organizational forms, regulatory frameworks, and social understandings.14

Why Would AGI Not Just Adopt Semiotic Categories?

Given these frictions, a natural question arises: Why wouldn't AI systems simply adopt existing human semiotic categories? If human-designed categories structure current economic activities, wouldn't it be more efficient for AI systems to conform to these established patterns rather than imposing their own tokenization?

The answer, I think, lies in the fundamentally different operational logics of the two systems. AI systems don't "choose" their tokenization strategies based on convenience or compatibility with existing structures. Rather, their tokenization emerges from the statistical patterns in their training data and the optimization processes that maximize predictive capability. If the most predictively powerful patterns don't align with human semiotic categories, the AI system won't "decide" to adopt those categories—it will operate according to the patterns it has identified.

Consider how this plays out in language models. These systems don't choose to tokenize language into words, morphemes, or phonemes because linguists have identified these as meaningful units. They tokenize language into statistically frequent character sequences, which often cut across linguistic categories. The model doesn't "know" what a morpheme is; it knows only the patterns that most efficiently enable prediction. And we may see tokenization evolve to completely different patterns in the future as well.

AI systems could potentially be engineered to respect human semiotic categories—essentially forcing their tokenization to align with our existing chunking of the world. This would involve artificially imposing human category boundaries on the system's operations. But as Rahwan et al. (2019) argue in their work on "machine behavior," artificially constraining AI systems to human interpretable patterns may create significant limitations, preventing discovery of novel structural relationships that exist outside our conceptual frameworks.15

Crucially, adopting semiotic framing imposes an upper bound on potential efficiency gains and productivity growth. If AI systems are constrained to operate within human task categories, their maximum potential improvement is essentially the speed at which human tasks can be performed. This creates an automation trap—where focusing on automating existing processes limits the transformative potential of new technologies.16

Tokenized frameworks, by contrast, have no such upper bound. By reorganizing economic activities according to patterns that may have no intuitive human parallel, they can potentially discover entirely new modes of production and service delivery that transcend the limitations of activities organized around human cognitive and perceptual categories. The history of technological revolutions, as documented by Perez (2010), shows that the most profound economic transformations don't merely accelerate existing processes but fundamentally reorganize activities in ways that would have been inconceivable in the previous paradigm.17

A Toy Model of Tokenization vs. Semiotic Economies

To understand how tokenized and semiotic economic systems differ, we can develop a small toy model that captures the essential dynamics of both systems.

Define an economy as a set of activities A, processed through a chunking function C. This chunking function maps raw economic activities into discrete operational units according to different principles:

In a semiotic economy, the chunking function C_s maps the set of activities A to a set of signs S:

C_s: A → S

These signs are discrete but meaning-laden units—like "jobs," "tasks," or "products." The value generated in a semiotic economy (V_s) emerges from the relational networks between these signs and their cultural contexts:

V_s = f(S, R)

Where R represents the cultural, social, and institutional relationships that give meaning to the signs. This captures how economic value in human systems depends not just on the activities themselves but on their social interpretation and contextual meaning.

In a tokenized economy, the chunking function C_t maps the same set of activities A to a different set of units—tokens T:

C_t: A → T

These tokens are defined not by human meaning but by predictive utility—they might represent patterns like "gesture sequence X" or "output configuration Y" that have no intuitive human interpretation. The value generated in a tokenized economy (V_t) ties directly to optimization and predictive power:

V_t = g(T, P)

Where P represents predictive accuracy or computational efficiency. This captures how AI systems organize economic activities around patterns that maximize prediction rather than human meaning.

The key insight of this model is that the same underlying economic activities A can be chunked in fundamentally different ways, leading to different value generation mechanisms. Neither chunking is inherently "correct"—they organize the same reality according to different principles.

This model helps us understand why the transition from semiotic to tokenized economic organization might progress unevenly across different domains. The speed and extent of this transition will depend on the compatibility between the two chunking systems. We can represent this compatibility as the overlap between the partitions created by C_s and C_t:

Compatibility(C_s, C_t) = measure of alignment between S and T

In domains where signs and tokens naturally align—where human-meaningful categories happen to correspond closely with statistically predictive patterns—the transition will be relatively smooth and rapid. For example, in logistics, the human conception of "efficient delivery route" may align well with the AI token representing optimal path configurations.

Conversely, in domains where signs and tokens diverge significantly—where human meaning depends heavily on social context that doesn't translate to statistical patterns—the transition will face greater friction. In care work, for instance, the human sign of "compassionate care" encompasses complex social and emotional dimensions that may not map cleanly to any set of tokens based on observable behaviors alone.
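One way to make the compatibility measure tangible is to treat C_s and C_t as two labelings of the same activities and ask how often they agree about which activities belong together. The sketch below uses the Rand index (the share of activity pairs that both partitions either group together or keep apart) as the alignment measure. That choice, the activity names, and the labels are all assumptions made for illustration; the essay itself leaves the measure unspecified.

```python
from itertools import combinations


def compatibility(c_s, c_t):
    """Rand index between two chunkings, each given as a dict: activity -> chunk label."""
    if set(c_s) != set(c_t):
        raise ValueError("both chunkings must cover the same activities")
    pairs = list(combinations(sorted(c_s), 2))
    if not pairs:
        return 1.0  # fewer than two activities: nothing to disagree about
    # A pair counts as agreement if both chunkings group it, or both separate it.
    agree = sum((c_s[a] == c_s[b]) == (c_t[a] == c_t[b]) for a, b in pairs)
    return agree / len(pairs)


# Hypothetical semiotic chunking: activities grouped by familiar roles.
c_s = {
    "draft_text": "writing",
    "revise_text": "writing",
    "sketch_image": "design",
    "layout_page": "design",
}

# Hypothetical tokenized chunking: the same activities grouped by statistical similarity.
c_t = {
    "draft_text": "t1",
    "sketch_image": "t1",
    "revise_text": "t2",
    "layout_page": "t2",
}

print("semiotic vs tokenized:", compatibility(c_s, c_t))
print("semiotic vs itself:", compatibility(c_s, c_s))
```

A value near 1 corresponds to domains where signs and tokens carve up the activities in nearly the same way, and a low value to domains where the tokenized grouping cuts across the human categories. Other partition-similarity measures would serve equally well for this purpose.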

This model predicts that AGI will transform economic activities most rapidly in domains where:

The tokenized chunking function C_t produces more precise or efficient organization than the semiotic chunking function C_s.

The compatibility between signs and tokens is relatively high, reducing friction in the transition.

The relational context R plays a less dominant role in value creation compared to predictive accuracy P.

By contrast, activities deeply embedded in cultural contexts and relational networks will resist tokenization, not because they can't be computationally represented, but because the value they generate depends on semiotic interpretations that aren't captured in purely predictive frameworks.

This formalization provides a first, provisional distinction: semiotic economies fundamentally derive value from shared stories, cultural contexts, and human meaning-making, while tokenized economies derive value from machine foresight, pattern recognition, and predictive optimization. The emerging economic landscape will be shaped by the interaction between these two systems, with some activities reorganized around tokens while others remain anchored in signs.

Concrete Examples: The Tokenization of Economic Activities

To make this abstract model concrete, let's examine specific examples of how tokenization might reshape economic activities:

Example 1: Content Creation and Curation

In the current semiotic economy, content creation is organized around distinctive roles: writers produce text, editors refine it, designers create visuals, and marketers promote the final product. Each role involves a cluster of tasks that are semiotically coherent—writing involves ideation, research, drafting, and revision; editing involves correctness checking, structural analysis, and stylistic refinement.

In a tokenized economy, these activities might be reorganized around entirely different patterns. An AI system might identify that certain types of ideation are statistically similar to certain types of image generation but differ from other forms of writing. The boundary between writing and visual design—clear in human semiotic categories—might dissolve as tokens regroup these activities based on statistical patterns.

We already see hints of this reorganization in tools like DALL-E and Midjourney, which treat textual prompts and image generation as part of a continuous process rather than separate domains. The human role in this ecosystem isn't simply "automated away"; it's transformed into prompt engineering—a hybrid activity that doesn't fit neatly into existing semiotic categories of writer, editor, or designer.

Example 2: Medical Diagnosis and Treatment

Current medical practice divides healthcare activities according to body systems (cardiology, neurology), intervention types (surgery, medication, therapy), and disease categories. These divisions structure everything from medical education to hospital organization to insurance coding.

A tokenized approach might identify entirely different patterns. AI diagnostic systems already show a capability for identifying correlations between seemingly unrelated symptoms or treatment responses that cut across traditional medical categories. For instance, researchers have reported deep learning models that found patterns in patient data grouping certain cardiac and respiratory symptoms together on the basis of shared underlying mechanisms not recognized in standard medical taxonomies.18 Tokenization may even resist the idea that the individual patient is a meaningful unit, and might instead treat the social network in which we are embedded.

The redistribution of medical activities according to these tokenized patterns would require fundamental reorganization of healthcare delivery. New specialist roles might emerge around clusters of symptoms that AI systems identify as statistically related, regardless of the body systems involved. Treatment protocols might be organized around response patterns rather than disease categories.

Tokenization of Market Structures

Beyond individual economic activities, tokenization may fundamentally reshape market structures themselves. Traditional economic analysis divides the economy into sectors and industries based on products, production processes, or market relationships. These divisions reflect human semiotic categorization of economic activities.

AI systems might identify subtly different patterns of economic organization that optimize for prediction and efficiency. Consider how this might play out in vertical and horizontal market relationships:

Vertical Integration: Current theories of the firm explain vertical integration decisions based on transaction costs and contractual incompleteness. These theories assume boundaries between economic activities that align with human semiotic categories, even as those categories evolve. Tokenization might identify different optimal boundaries that don't correspond to traditional distinctions between, for example, manufacturing and distribution.

Horizontal Segmentation: Industries are currently defined by product or service categories that make sense to human consumers and producers. A tokenized economy might reorganize production around patterns that humans don't naturally perceive as related—creating new combinations of products and services based on statistical relationships in consumer behavior or production processes.

Network Organization: Platform business models already demonstrate how digital technologies can reorganize economic activities around data flows rather than traditional product categories. Tokenization may accelerate this trend, with economic organization emerging from the statistical patterns identified by AI systems rather than predetermined industry boundaries.

This transformation suggests that concepts like "industry" and "sector" may become increasingly inadequate for understanding economic organization. Traditional economic statistics organized by NAICS or SIC codes might fail to capture the emerging patterns of a tokenized economy. New analytical frameworks may be needed to track economic activity organized around AI-identified tokens rather than human semiotic categories.

Tension and Hybrid Forms

The relationship between semiotic and tokenized economic organization won't be a simple transition from one to the other. Instead, we're likely to see complex tensions and hybrid forms as these different chunking systems interact.

Human semiotic categories won't disappear—they'll continue to structure how people understand and navigate economic activities. Legal and regulatory frameworks, educational systems, and social norms will maintain aspects of the current semiotic organization even as tokenization introduces new patterns.

The result may be a kind of hybridization or recombination of human and machine capabilities. Economic activities might be partially reorganized around tokenized patterns where these offer significant efficiency or predictive advantages, while maintaining semiotic organization where human interpretation and social coordination are paramount.

This tension creates new coordination challenges. How will human workers navigate organizations structured around tokens they don't intuitively understand? How will regulatory systems account for economic activities that don't fit neatly into established categories? How will educational systems prepare workers for roles that might be reorganized according to tokenized patterns during their careers?

These questions suggest that the economic impact of AI won't be determined solely by its technical capabilities, but by the complex socio-technical systems that emerge as tokenized and semiotic chunking interact. Understanding this interaction requires moving beyond simplistic automation narratives to consider how different ways of carving up the economic world might coexist and transform each other.

Criticisms and Replies

The tokenization framework presented in this essay offers a novel lens for understanding how artificial general intelligence might reshape economic activities. Like any theoretical framework, however, it invites critical examination. In this concluding section, we address key criticisms that might be raised—particularly from classical economic perspectives—and suggest how these concerns might be addressed in future research.

Perhaps the most significant challenge to the tokenization framework is empirical testability. How can we measure the degree of tokenization in an economy? How might we empirically distinguish between tokenized reorganization and other forms of technological change?

These are valid concerns. Without clear empirical markers, the framework risks becoming a conceptual exercise without practical application. Future work should focus on developing observable indicators of tokenization processes. These might include:

Emergence of novel job categories that cut across traditional industry boundaries. Bessen (2019) documents how technological change creates new work categories rather than simply eliminating existing ones. A testable prediction of our framework is that tokenization will accelerate this process in distinctive ways, creating roles that combine previously unrelated skills according to patterns identified by AI systems.

Changes in productivity distributions within versus across traditional task categories. If the tokenization hypothesis is correct, we should observe increasing productivity variance within traditional job categories but decreasing variance within tokenized clusters of activities (which may cut across traditional categories). This pattern would differ from the predictions of skill-biased technological change models.

Evolution of organizational boundaries that defy transaction cost explanations. The tokenization framework predicts that firm boundaries may reorganize in ways that traditional transaction cost economics cannot explain. Empirical work could test whether AI-intensive industries show distinctive patterns of integration and outsourcing compared to what would be predicted by standard models.

These empirical approaches would help distinguish the effects of tokenization from other technological impacts, addressing concerns about falsifiability.
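
The second indicator above can be sketched as a simple comparison of within-group variance under two different groupings of the same workers. This is a minimal illustration under stated assumptions: the records, the field names (`job`, `cluster`, `productivity`) and the helper function are hypothetical, and a real study would need a defensible productivity measure and a principled way of deriving the clusters.

```python
from collections import defaultdict
from statistics import pvariance


def mean_within_group_variance(records, key):
    """Size-weighted average of the per-group population variance of productivity."""
    groups = defaultdict(list)
    for record in records:
        groups[record[key]].append(record["productivity"])
    total = sum(len(values) for values in groups.values())
    return sum(len(values) * pvariance(values) for values in groups.values()) / total


# Hypothetical toy data: the same four workers grouped by traditional job
# title and by an assumed AI-identified cluster of activities.
workers = [
    {"job": "analyst", "cluster": "A", "productivity": 10.0},
    {"job": "analyst", "cluster": "B", "productivity": 21.0},
    {"job": "designer", "cluster": "A", "productivity": 11.0},
    {"job": "designer", "cluster": "B", "productivity": 20.0},
]

by_job = mean_within_group_variance(workers, "job")
by_cluster = mean_within_group_variance(workers, "cluster")
print(f"within-job variance: {by_job:.2f}, within-cluster variance: {by_cluster:.2f}")
```

In this toy case the variance within job titles is large and the variance within clusters is small, which is the pattern the hypothesis predicts. The interesting empirical question is whether that gap widens over time in AI-intensive settings.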

A second criticism might contend that the essay overestimates the degree to which AI will reorganize economic activities according to novel patterns rather than simply accelerating existing market-driven trends. Classical economists would argue that markets already optimize task boundaries based on transaction costs, production efficiency, and comparative advantage.

This criticism has merit but overlooks the distinctive nature of AI-driven tokenization. Traditional market forces operate through price signals interpreted by human agents with limited cognitive capacity, working within existing semiotic frameworks. AI systems, by contrast, can identify patterns across vastly larger datasets without the constraints of human conceptual categories.

Hayek's insight that markets efficiently coordinate distributed information remains valid, but AI systems change what kinds of patterns can be identified in that information. The tokenization framework doesn't claim that market forces won't shape AI's economic impact—rather, it suggests that market forces will increasingly operate through systems that perceive patterns humans cannot readily detect.

An empirical test of this distinction would examine whether AI-intensive industries show reorganization patterns that diverge from what would be predicted by standard models of market-driven specialization. For instance, do new combinations of economic activities emerge that would appear inefficient according to traditional transaction cost or comparative advantage analyses, but prove highly effective when organized around AI-identified patterns?

A third criticism might argue that the framework underestimates how quickly human semiotic systems can adapt to incorporate AI-identified patterns. If tokenized organization creates value, won't humans rapidly develop new semiotic frameworks to understand and work with these patterns?

This criticism rightly highlights human adaptability. Linguistic and conceptual systems do evolve to capture new distinctions and relationships. However, this adaptation faces several constraints:

Cognitive limitations in pattern recognition. Human cognition excels at certain kinds of pattern recognition but struggles with others, particularly high-dimensional statistical relationships that don't map to perceptual or causal intuitions. Some AI-identified patterns may remain persistently unintuitive to humans, as demonstrated by the "black box" problem in machine learning.[19]

Institutional inertia in semiotic systems. As North (1990) argues, formal and informal institutions change at different rates. Legal categories, educational credentials, and professional identities embedded in regulatory frameworks may adapt much more slowly than individual understanding. The "semiotic immune system" operates partly through these institutional structures.[20]

Path-dependent evolution of language and categories. Clark (2006) demonstrates how language and conceptual systems evolve in path-dependent ways, constrained by existing structures. New semiotic categories don't emerge in a vacuum but must connect to existing frameworks, potentially limiting rapid adaptation to radically different tokenization patterns.[21]

These constraints suggest that while human semiotic systems will certainly evolve in response to AI-identified patterns, this adaptation will be neither complete nor frictionless. The interaction between tokenized and semiotic organization will likely remain dynamic, with periods of misalignment driving economic and social change.

Synthesis and Future Research

These criticisms highlight important directions for developing the tokenization framework. Rather than viewing tokenization as replacing semiotic organization, future research should explore their co-evolution. Key questions include:

How might human semiotic systems evolve in response to AI-identified patterns? This requires interdisciplinary work connecting economics with cognitive science and linguistics.

What hybrid organizational forms might emerge that combine tokenized efficiency with human interpretability? Such structures might represent a new stage in organizational evolution beyond current models.

How can economic policy and education systems adapt to a world where relevant skill clusters no longer align with traditional categories? This has profound implications for workforce development and regulatory frameworks.

Can we develop economic statistics and measurement approaches that capture tokenized economic organization? Current industry and occupational classification systems may increasingly miss the actual patterns of economic activity.

The tokenization framework aims to complement standard economic analyses, not replace them, by highlighting a distinctive aspect of AI's economic impact. Traditional models focusing on automation, skill-biased technological change, and productivity growth remain valuable. What the tokenization perspective adds is an account of how the underlying organization of economic activities might change in ways that these models alone cannot capture.

As artificial general intelligence continues to develop, the distinction between semiotic and tokenized chunking of economic activities may become increasingly consequential. By anticipating this transformation, we can better prepare our economic institutions, educational systems, and policy frameworks for a world where the boundaries between economic activities no longer align with our intuitive categories. This preparation requires not just technical adaptation but a fundamental rethinking of how we conceptualize, measure, and govern economic organization.

The greatest value of the tokenization framework may ultimately lie not in its specific predictions but in how it expands our conceptual toolkit for understanding technological change. By recognizing that AI systems don't just automate existing tasks but potentially reorganize the very categories through which we understand economic activity, we open new avenues for both theoretical insight and practical response to one of the most significant transformations of our time.

Tokenization is also fascinating from a purely philosophical angle, and that is something I will want to come back to. The tokenization of the world is a project that looks like the love-child of logical positivism and computer science - and it is a vastly ambitious philosophical project.

Thanks for reading,

Nicklas

[1] See e.g. Sennrich, R., Haddow, B. and Birch, A., 2015. Neural machine translation of rare words with subword units. arXiv preprint arXiv:1508.07909 and Wu, Y., Schuster, M., Chen, Z., Le, Q.V., Norouzi, M., Macherey, W., Krikun, M., Cao, Y., Gao, Q., Macherey, K. and Klingner, J., 2016. Google's neural machine translation system: Bridging the gap between human and machine translation. arXiv preprint arXiv:1609.08144.

[2] See Peng, F., Schuurmans, D. and Wang, S., 2004. Augmenting naive bayes classifiers with statistical language models. Information Retrieval, 7, pp.317-345.

[3] See Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł. and Polosukhin, I., 2017. Attention is all you need. Advances in neural information processing systems, 30.

[4] See https://a16z.com/why-software-is-eating-the-world/

[5] See Baldwin, C.Y. and Clark, K.B., 2000. Design rules, Volume 1: The power of modularity. MIT Press.

[6] See

[7] See Mielke, S.J., Alyafeai, Z., Salesky, E., Raffel, C., Dey, M., Gallé, M., Raja, A., Si, C., Lee, W.Y., Sagot, B. and Tan, S., 2021. Between words and characters: A brief history of open-vocabulary modeling and tokenization in NLP. arXiv preprint arXiv:2112.10508.

[8] See Bostrom, K. and Durrett, G., 2020. Byte pair encoding is suboptimal for language model pretraining. arXiv preprint arXiv:2004.

[9] See e.g. Toraman, C., Yilmaz, E.H., Şahinuç, F. and Ozcelik, O., 2023. Impact of tokenization on language models: An analysis for Turkish. ACM Transactions on Asian and Low-Resource Language Information Processing, 22(4), pp.1-21 and Rust, P., Pfeiffer, J., Vulić, I., Ruder, S. and Gurevych, I., 2020. How good is your tokenizer? On the monolingual performance of multilingual language models. arXiv preprint arXiv:2012.15613.

[10] See Alayrac, J.B., Donahue, J., Luc, P., Miech, A., Barr, I., Hasson, Y., Lenc, K., Mensch, A., Millican, K., Reynolds, M. and Ring, R., 2022. Flamingo: a visual language model for few-shot learning. Advances in neural information processing systems, 35, pp.23716-23736.

[11] See Eco, U., 1976. A theory of semiotics. Indiana University Press.

[12] See Deacon, T., 1997. The symbolic species. W.W. Norton & Company.

[13] See Bourdieu, P., 1991. Language and symbolic power. Harvard University Press.

[14] See Brynjolfsson, E., Rock, D. and Syverson, C., 2021. The productivity J-curve: How intangibles complement general purpose technologies. American Economic Journal: Macroeconomics, 13(1), pp.333-372.

[15] See Rahwan, I., Cebrian, M., Obradovich, N., Bongard, J., Bonnefon, J.F., Breazeal, C., Crandall, J.W., Christakis, N.A., Couzin, I.D., Jackson, M.O. and Jennings, N.R., 2019. Machine behaviour. Nature, 568(7753), pp.477-486.

[16] See Agrawal, A., Gans, J. and Goldfarb, A., 2019. Economic policy for artificial intelligence. Innovation policy and the economy, 19(1), pp.139-159.

[17] See Perez, C., 2010. Technological revolutions and techno-economic paradigms. Cambridge journal of economics, 34(1), pp.185-202.

[18] See Rajkomar, A., Oren, E., Chen, K., Dai, A.M., Hajaj, N., Hardt, M., Liu, P.J., Liu, X., Marcus, J., Sun, M. and Sundberg, P., 2018. Scalable and accurate deep learning with electronic health records. NPJ digital medicine, 1(1), p.18.

[19] Which some take to be an argument for interpretable machine learning, see Rudin, C., 2019. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nature machine intelligence, 1(5), pp.206-215.

[20] See North, D., 1990. Institutions, institutional change and economic performance. Cambridge University Press.

[21] See Clark, A., 2006. Language, embodiment, and the cognitive niche. Trends in cognitive sciences, 10(8), pp.370-374.

Fantastic essay, a lot of food for thought. I take it that tokenization is very distinct from a semiotic framework, and AI-first companies will surely be better positioned to reap the benefits of AI and may become "superstar firms" on their own. Just how deeply this will transform the economy overall remains to be seen, though. A historical analogy could be that the industrial revolution also "tokenized" labour (e.g. the production of textiles), and this eventually created a whole new economic system and also modern democracies, so the impact of this transformation was huge!