Unpredictable Patterns #69: The Future of Free Speech

Musk (but only a little), the nature of rights, institutions, deliberation and discovery, edges and centering, places of speech and thinking about what it means to mean something

Dear reader,

This week we discuss free speech and content moderation a bit, and what models and analogies seem to capture us in this field. I hope you find it interesting. My own views only, as usual, but good to point that out, probably.

Free speech and democracy decoupled?

Oh boy, we have spent a lot of time talking about Elon Musk and Twitter. It is a topic that seems to be rivaling that of the Ukraine war and there is no one - myself included - without a take on the acquisition. And this for a micro-blogging service that has somewhere on the order of 200-400 million users. It is easy to dismiss this as the elites' all-too-predictable interest in themselves, or the proverbial storm in a teacup, but that would miss a larger point. The interest in Twitter is closely connected to the interest in free speech and content moderation, and how the transformation of speech with the Internet will impact our society in the long run.

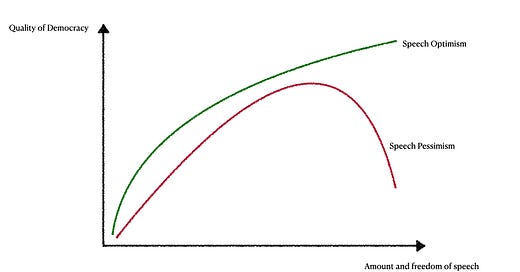

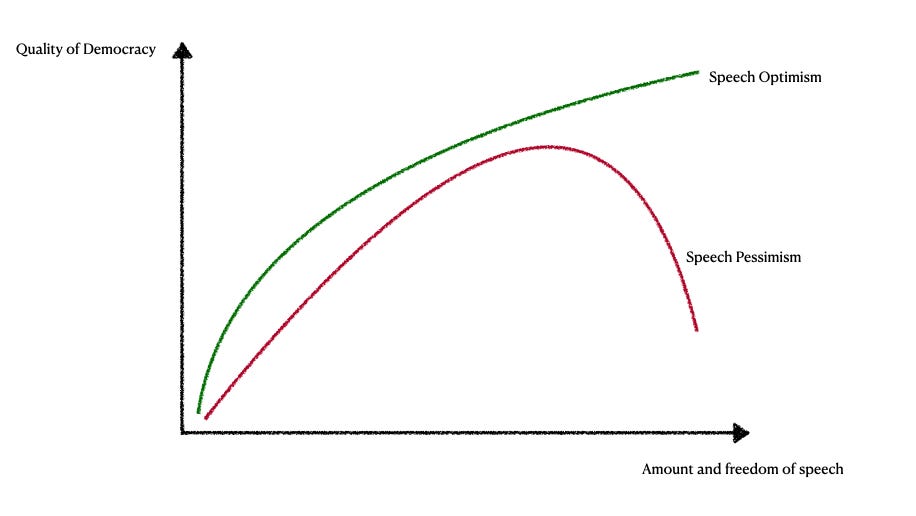

There are different views of the relationship between the amount and freedom of speech on one hand and the quality of democracy on the other. The speech optimists - early Internet enthusiasts among them - saw a direct improvement to the quality of democracy with more speech and spoke about a democratization of speech.

Currently, the view has shifted drastically, perhaps even to a point where people feel that there is a breaking point where the amount and freedom of speech actually lead to a much less valuable democracy, and that the relationship is better described by an inverted U-curve than anything else.

So which is right? The answer here is that neither is right, because there is not a flat, 2-dimensional relationship here. We need a much more complex and multi-dimensional model if we want to make sense of this question. What we are lacking is not just evidence, but some conceptual clarity: we need to examine the core concepts in this debate much more closely.

Let's start with the idea of free speech or free expression. Why do we think this is a good idea? Assume for a moment that we do not get to just say that it is an inalienable right, but that we have to explore why it is a right - what would we say then?

There are at least two possible answers here.

The first is that we want free speech in a society because it maximizes exploration of new ideas and opinions, and ensures that we do not get stuck in old views. Free speech is an important adaptive strategy to a world that is changing, complex and always needs to be explored anew. Even old facts have a half life and so the way we make sure that we can keep evolving is that we try to maximize the discovery of new ideas.

Free speech, in this model, is a mechanism of discovery.

This view is best reflected in the "marketplace of ideas" metaphor that underpins some of American thinking on free speech.

The second is that we want free speech because that is how we best resolve conflicts and work together in a society. Free speech is a mechanism that allows us to coordinate social efforts, allocate resources and minimize conflict while we maximize collaboration.

Free speech is, in this model, a mechanism for deliberation.

This view is perhaps best reflected in the idea of a "public sphere" and is, in many ways, a more European way of thinking about speech.

Free speech, then, serves to help us discover new ideas and deliberate as a society.

If we agree with this, we see why the 2-dimensional analysis will not hold. The problem we are facing is not one of too much speech, but an interesting variation on that: technology has radically increased our ability to discover but done very little for our ability to deliberate. So the problem is not one of amount or degrees of freedom, but rather a problem around the underlying mechanisms in which we can *use* speech.

It is worth commenting on a tension here that tends to obfuscate the discussion - and that is the suggestion that something needs to be useful in order for it to be a right. That is not what I am saying - but I want to make a related point about the concept of a right as a use.

What is a right? What image do you have of a right? A right feels, sometimes, almost like a card that you can show if someone tries to stop you from doing something, like the detective who walks past the yellow crime scene tape: you flash your badge and the police back down. You have a right to be there, to cross the line. A right, in this model, gives you the ability to enter the crime scene and walk across it - explore and examine it. But it does so not because of the card, but because of the institutions that the card is embedded in. The police recognize the card because they know that it signifies a certain role in the police force, and they also know that you have a certain experience, they know how the card is issued and what it means to use it.

The card is not the right - the right is the card plus the institutions, it is the use of the card.

In this model rights are embedded. They exist within certain institutions, and it is only in this framework that they make sense. Rights only exist as a part of a larger pattern of behaviour, anchored in institutions and practices - they are not social atomic facts. What determines, then, the impact of speech on democracy is not the amount or the degree of freedom, but the quality of the institutions in which the right to free speech is embedded.

The real question, then, is how we are thinking about the institutional framework for free speech - how we are ensuring that discovery mechanisms and deliberative institutions are changing and evolving to adapt to the fact that we are now speaking in a different way, using new technologies to express ourselves.

Institutional design projects

So how are we solving this question today? We are in fact in the midst of a large institutional design project, where the institutions of speech are being crafted - but we call it content moderation and the focus we have is on how to minimize harm, rather than maximize the utility, of speech.

We could even say that the discussion about content moderation is anchored in speech pessimism, rather than any real ambition to build a more useful and valuable right of free speech.

Now, that is perhaps unfair - but it seems clear that the discussion is less about how we can build new ways for qualitative deliberation on top of the greatly strengthened mechanisms of discovery that we have built, and more about how speech online can become less harmful. And that is a problem - for a couple of reasons.

First, the focus on minimizing the negative is unlikely to give rise to new institutions that provide positive value: you will get authorities that help you restrict speech and remove content, but will you get institutions that help develop new arenas for the public sphere? Will it encourage innovation around deliberation at scale?

Second, the focus on harm hides the fact that we are discussing the transformation of a right. Take the extreme example of those who compare "bad content" to pollution: is this a model that is likely to strengthen the right to free speech? The idea that "bad content" is a negative externality and needs to be regulated as such is likely to lead to risk-averse over-regulation rather than the careful exploration of the future shape of free speech.

Overall, the focus on harm is a focus on how old institutions can cope with new patterns of behavior, and will discourage change and adaptation. If we, instead, suggest that these new patterns can be strengths if embedded in the right framework - then we would start looking for new institutions for new patterns of behavior.

Now, if we approach the current discussion about content moderation as a civilizational design project for a new right of free speech (even if that sounds impossibly grand) we also would probably pause at the transfer of power to private entities. The current regulatory models almost uniformly seem to suggest that it is private companies' role to ensure that the new patterns of behavior fit into the old institutions - and to do so from a perspective of harm and risk.

To be clear, I am not troubled that private entities are asked to do this -- that was the case for earlier phases in the development of free speech as well. The newspapers were asked to clean up an industry that was as messy and filled with questionable speech as the Internet is. In fact, if you were to ask what share of early journalism was problematic and compare that to the share of problematic content on the Internet -- there would be no competition. The vast majority of everything that is on the Internet is unproblematic content, and it is worth keeping that in mind.

No, private entities can do this - and it seems to be the preference if we read the legislation - but they do so under a general assumption of what success looks like.

The press knew that they were asked by society to curate an important right and make it work for public debate and discussion. Is that what we are asking the platforms to do as well? To curate an important right and maximize the benefit from that right? Or are we just asking them to minimize the cost? If the latter, and it does not seem too far-fetched to suggest that this is where we are, why? Why would we miss this opportunity to transform free speech again - just like it has been transformed before in our history?

It is useful to explore a counterfactual here: what would the world have looked like if the early press had been met with the same attitude, the same regulatory frameworks, prohibitive fines and monitoring duties that now face the platforms - how would our societies have developed then?

Apart from the spirit in which we approach the issue, there is also another conceptual problem here - and that is that we seem to think that content is the right focus. That we should focus on individual pieces of content and seek to decide what stays up and what comes down. This is such a simplistic model that one wonders how we ended up here - do we really think we can answer the question about how free speech is transformed in the age of no-cost, global expression by setting out rules for how much of a nipple can be visible before it is pornography?

The focus on content has probably slowed down what needs to be a much deeper conversation by a decade or more. How did we end up here?

Edges and centers

One answer is that it is actually the platforms that are responsible for this - through a subtle design choice early on. Platforms decided - for different reasons - that filtering would happen at the center of the network, not the edges. In fact, early on the Internet explored filtering at the edges as a much better solution - you could download software or just adapt your settings to filter out certain content, sites or even entire countries.

W3C and other standards organizations engaged in developing filtering standards, but the early attempts - like the Platform for Internet Content Selection (PICS) - quickly came under heavy criticism because of the categories that they developed, and because of the general effect on speech on the Internet that people suggested such filtering would have.

At the time, the prevailing view was that speech on the Internet should be absolutely free and the sentiment that underpinned the debate was that of cyber libertarian free speech maximalism. The idea of a filtering standard at the edges was condemned as a secretive, behind-the-scenes way to remove content. And a lot was made of governments' ability to use edge filtering to impose filtering on their citizens - because any point in the network that can be effectively controlled outside of the center can be thought of as an edge, after all.

This, and the desire from early platforms to control what they hosted (and, if we are completely honest, a belief that they were better judges of this than anyone else) led to a complete shift to filtering at the center, at the platform, rather than the edges.

If we, again, entertain a counterfactual, we can ask what the world would have looked like if filtering at the edges had won. There are two different scenarios here we need to think about.

First, a scenario in which governments indeed did what the people opposing PICS feared and used edge filtering standards to filter speech on the Internet. That would have led to earlier filtering of the Internet, but it would also have shifted the onus for what was filtered out from the private platforms to public authorities deploying the filters. There would be political accountability for all filtering decisions and very little of the kind of varied application of content moderation that we see today. Arguably this would also have ushered in the age of end-to-end encrypted messaging apps much earlier.

Second, a scenario in which the edge filtering was left to individuals or communities. In this scenario people could pick white lists or black lists and so curate what content they were exposed to. This is a scenario in which individual users have accountability for what they choose to consume, and there is a much stronger sense of agency and responsibility at the individual level. Filtering companies would have to offer a broad array of different configurations and individual choice would determine the degree to which you were exposed to offensive or harmful content.

Would this have been much better or much worse? It is hard to say - but I do think that there are stable and robust social equilibria for both filtering at the edges and filtering at the center - and because of a series of quite early decisions we ended up filtering at the platform center rather than building out filters as individual settings.

It is worth noting that the fear that some governments would use filtering software was realized even if the filtering standards did not pass. So the choice was never really one of having an unfiltered internet or not, it was a choice between having filtering at the edges or at the platforms in open societies. That was not reflected in the heated debates so much because they were still held in a spirit of exuberant optimism.

Now, when the debate shifts to focus on filtering at the center, then the natural focus is individual pieces of content - and in order to do content moderation at scale, hard and fast rules are absolutely necessary. So rather than discussing new arenas for speech the platforms ended up making hard calls around hard cases - and being judged by those hard cases in the public eye, the vast benefits to public dialogue and discovery obscured by a comparatively small set of videos consumed by very few.

Threads to pull at?

But are there alternatives? Could we propose new models that would allow us to salvage the benefits of the Internet for the transformation of free speech as a right? Can we build new institutions rather than awkwardly try to impose old institutions?

I think the answer is yes, but would also note that relatively scarce resources are spent on this problem as companies are gearing up to build compliance teams and figure out how to apply the regulatory reforms that focus on harm and misinformation. If we are serious about this we need to change the way we think about the problem. Today, if we allow but a little simplification, the question we are asking is some version of:

How can we reduce the harm of online speech and make it safe?

We could also be asking the question:

How do we unlock the potential value of online speech through new innovations and institutions?

The second question is not a cyber libertarian question, or even a very optimistic question. It is a question that assumes that there is an upside to a development in which millions of people have suddenly been invited into the public sphere.

There are no simple answers to the second question - but there seem to be a few points worth exploring, at least.

First, we should think less about content and more about spaces. We focus a lot on what can be said, and less on where it is said - but that very blindness is now displacing a lot of the debate into end-to-end encrypted dark channels where there is no longer any ability for us to follow the arguments, conspiracy theories and political claims of those that consider themselves left behind. We need to think hard about what the best division of the free speech landscape into spaces looks like, and start asking what can be said where - because free speech is localized, it occurs in a space and in an arena.

That we are blind to this today is also obvious through the often made mistake where we equate being able to post something to a platform with being able to share it on the Internet. Even if we feel that not being able to post on a platform is the same as not being able to speak, we need to explore the conditions under which this really holds true. When is a platform a public space? And is the right to free speech the right to speak in a class of spaces? The debate about speech and reach has at least opened up this question.

One key question is if it would be valuable to have open spaces, accessible to all, where anything could be said - just to ensure that it is said in the open and not in encrypted spaces where debates can become echo chambers much faster. Should we prefer unmoderated open spaces to unmoderated encrypted spaces? Because that is the choice - it is not a choice of whether to moderate or not.

This is actually an old idea: Simone Weil suggested in The Need for Roots that we should have a space where anything can be said, but if it was meant and intended, well then the speaker should be held responsible.

At first glance this seems laughable - how on earth would we know if someone meant something? Our information landscape is flat and no one can determine intentionality, right? Well, we can create a rugged information landscape with blockchain models, where you can publish something irreversibly connected with your name. You could quite literally go on the record, and you could imagine a space designed as "The Record" where we publish only those things we really mean, and are happy to be held responsible for.

Second, we need to have a deeper conversation about what a right is. We cannot just pretend that rights are divorced from or can be completely decoupled from institutional frameworks. When JS Mill defends free speech he is defending press freedom - and when we disconnect his eloquent defense and use it to defend speech without any reference to institutions, we misunderstand the fact that the reason he could defend speech so superbly was that it was embedded, at the time, in the institution of the press.

If we agree that rights are patterns we can engage in, within the framework of institutions, we need to figure out how rights change when the institutions are weakened and shift in importance. And the question should not be if we should abandon the right, nor if we should continue to defend it as if it was still embedded in that old crumbling institutional edifice - but how we evolve our institutions and the right itself.

If we do that there is no question in my mind that the right to free speech or expression in our age will be orders of magnitude more valuable and powerful than in John Stuart Mill's time - but if we disconnect it from any institutional embedding it is likely to be chipped away at in the name of harm until there is just a shadow left.

Third, it is still worthwhile exploring filtering at the edges - as a response to the question about harm - and perhaps suggest that the mandating of such filtering should be a political decision. This, and any other way, in which the regulation of speech forces some political accountability would balance the development of new speech standards.

Voluntary filtering at the edges is already being developed in kids' versions of different platforms - and it is no secret that other users or communities than kids can use them - but there is no harm in allowing individuals to determine in much more detail what they want to see. At some point such filtering actually becomes the helpful service of curation; in a world of exploding information and scarce attention there is a business model there as well.

Fourth, we need to discuss the long term development of content moderation. The same principles that underpin content moderation are now slowly seeping into the lower levels of the stack as well -- and we move from content moderation to participant moderation, where services that typically do not deal with publishing content are asked to perform moderation that is analogous to content moderation - leading to binary decisions of whether or not someone should have access to basic services like web site hosting, e-commerce or any other SaaS platforms.

If we allow the idea of content moderation to become the normative model for how we think about abuse of Internet services, we are likely to end up centralizing participation filtering and that can quickly grow to a permission based ecosystem, where we lose a lot of the advantages from an open Internet.

This also means that we should not accept that metaphor up front when we discuss other services than content platforms. We should be clear that the analogy is a bad one, and it is not about where in the stack we do content moderation - it is about who gets to participate in using the Internet fully. The suggestion that it is a question of moderation implies that tweaks can be made, but for any level lower than the content level there are no tweaks - you either participate or you do not.

The whole model of "content moderation" is questionable and we need to find better models, focused on the capabilities users are afforded and their patterns of behavior, to analyze the challenges we are facing and find new solutions to capture the value of the Internet - understanding spaces of speech much better, as well.

Finally, we need to balance the focus on harm with a focus on benefits. Yes, arguably this is a pendulum movement from the early days when everything was brilliant with the Internet, and a correction was certainly in the cards, but the way we approach things today comes close to assuming a net cost to the Internet, and that is hardly realistic. If you think it is, arguably the burden of evidence rests with you.

Thanks for reading!

Nicklas

"Overall, the focus on harm is a focus on how old institutions can cope with new patterns of behavior, and will discourage change and adaptation" <-- my favorite bit.

"[...] you could imagine a space designed as "The Record" where we publish only those things we really mean, and are happy to be held responsible for."

Isn't that begging the question to "how on earth would we know if someone meant something"? Willingness to be held responsible for a speech act is obviously (to me at least) not a sufficient condition to determine if a speaker meant something. But what makes this an even harder philosophical problem is that we can deceive ourselves about our own views. Language games are often cheap and skin deep. I might say "I really mean it!" to convey something about my conviction, but in reality I'd never act on the range of implications of holding that view. (Life being absurd is a fun example - see Nagel's paper "The Absurd")

For what it's worth, I'm still sympathetic to the optimist view that there's a game-theoretical space where more speech creates social value. We internet optimists focused a lot on the availability of speech but didn't really think deeply enough about the underlying psychology or incentives and motivations for speech acts.